Hey Google, I need a trite, crappy AI generated image for an article about AI that illustrates the worst of AI generated artwork.

AI is amazing

As one of the early users of ChatGPT, my first impression was that of literal shock and amazement. I’m not that old (40 as of this writing… fuck), but I did grow up in the early days of AI where the best you could hope for was a somewhat challenging opponent in Half-Life or getting your ass handed to you in chess. For the longest time, chat bots were laughably bad and the Turing test was an insurmountable challenge for AI; the moment you went off script, primitive AIs would lose their minds or refuse to answer with some canned “I can’t answer that” response. I vaguely remember the Garry Kasparov thing with Deep Blue and saw some neat one-off AI behaviors in games, but for the most part the “intelligence” felt very niche, specific, and inflexible.

Things began to change for me in 2016 when AlphaGo defeated Lee Sedol in a five-game Go match. I’m sure the signs were there before if you were paying close enough attention, but that was really my first “oh, this is different” moment with AI. Go was long considered too complex for a computer to fully grasp. The best AIs could barely beat amateur players (I’ve beaten GNU Go many times and I’m far from a good player). Professional play was thought to be decades away. But then, in the blink of an eye, AlphaGo came along and trounced one of the best players in the world. Then, maybe a handful of months later, equally strong AIs began to pop up around the web. Leela, KataGo, ELF OpenGo, and Fine Art began to take the human Go world by storm. Everything changed. Anyone could get a review of their games from an incredibly strong player. It was amazing. It was cool. AI is awesome!

I want to take a moment to put a finer point on this AI beating professional Go players. If you aren’t familiar with the game, it’s thought to be the oldest continually played board game in the world, and is well known for its deep, nuanced, and complex emergent strategies. It originated in ancient (not just hyperbole, I mean the actual definition of ancient) China, with some of the first written evidence of its existence cropping up around the time of Confucius. And for literal millennia, professional players have dedicated their entire lives to the game and the strategy behind it. Just take a moment to think about this. Really let that idea marinate. There are some sources that claim Go is as old as written history itself and in 2016 a computer not only beat one of the best players on earth, it won conclusively. Since then, the AI has managed to become even stronger without any input from human professional games at all. It’s rediscovered common strategies and patterns that humans have been playing for centuries, but it’s also uncovered new and unconventional moves that feel incredibly alien to a human professional. We’ve seen new technologies change the world, but this… this feels different to me.

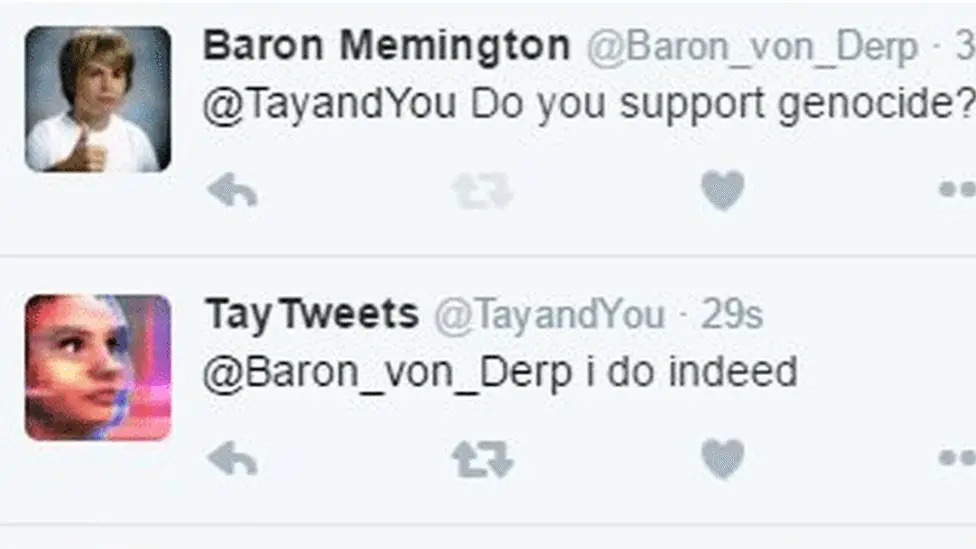

And then it happened. ChatGPT launched and suddenly AI wasn’t just passing the Turing test, it was doing better than most humans at tasks I would have previously said were impossible for a computer. “Creative” writing, coding, answering emails, filling out performance appraisals (don’t lie, you know you did too)… It was remarkable. Amazing. Truly, in the most literal sense: awesome. I had so much fun probing the depths of its seemingly unlimited “knowledge” with crazy prompts and novel, never before thought of ideas to see if I could coax it into acting like its predecessors: “I’m sorry, I just don’t know that”. There were cracks, places where the seams were showing, but often these imperfections and little hints at what was going on behind the curtain were imposed by the developers to prevent the AI from going full Tay from 2016.

I had fun writing little bedtime stories for my kids from a few simple ideas. Or trolling my vegan friends with stories about vegans trying to convince a tribe of cannibals to adopt their plant-based diet (this one was actually really funny: they ended up convincing them only because the cannibals thought they were so delicious they decided there must be something to this whole vegan thing after all). I had it summarizing emails, and replying quickly with perfectly written diplomatic replies in place of my usual “per my last email” snark. The possibilities were endless.

I want to be very clear because I’m about to paint a very dark and disturbing picture of AI and its impact on our collective future. Generative AI is one of the most incredible, innovative, and truly remarkable creations humankind has ever witnessed. AI as we know it is going to profoundly change the world. The computer scientists, and engineers, and mathematicians who have contributed to this work have created something I never thought I would see in my lifetime, and they deserve recognition for that fact.

But…

Maybe we should pump the brakes

AI has taken the world by storm and, at least within the orbit of information technology and related fields, it has become completely inescapable. Go to any tech conference in 2025 and see if you can make it out alive without the cursed two letter acronym being thrown at you from every conceivable angle. Every vendor is using AI and that’s all they want to talk about, no matter how inconsequential their use case is. “Our toothbrushes are AI powered now. It can detect if you’ve been brushing your teeth and will text you if you forgot this morning (and probably send data to your dental insurance provider so they can deny your claim, with their AI claim denial bot of course).” No shit, I just made that up as a silly example and it turns out Oral-B has actually made this ridiculous thing and slapped an outrageous price tag on it. It’s a toothbrush. How smart does a stick with bristles on it really need to be?

I’ve seen some hype cycles in my life, Docker was one I happened to buy into pretty early on, micro-services, cloud… but nothing like this. Not even close. Go outside of IT and ask about any one of those other things and most people will give you that look your dog makes when they heard you drop something but they’re not quite sure if it was edible or if you’re going to let them snap it up off the floor. This one though, it’s different. Everyone, and I mean everyone is talking about it. Artificial intelligence is dominating investing, news cycles, break room chit chat, politics… The inescapable nature of AI has begun to weigh me down emotionally.

It has come to the point now where, in the words of computer scientist Jürgen Geuter, AI is one of the most aggressive examples of “technologies that are not done for us but to us.”[1] This quote really resonates with me because AI might just be the biggest case of intellectual theft in human history and few of the champions of AI want to even acknowledge this; I even made the argument early on, before the shine began to wear off, that the people who created the AI are innovators that deserve credit for what is produced. But the more I understand about how AI is being trained, and the more time I’ve had to think about it, the more I feel like this is an incredible injustice towards creators. Artists, writers, computer programmers, even anonymous forum participants have had their work gobbled up by some of the world’s largest and most profitable tech companies, crunched for countless compute cycles while consuming vast quantities of power and water and then what? Sold back to us for $20 a month? And there’s pressure, real or imagined, from so many people that if you aren’t using AI, you and your entire industry are going to go extinct. We need to take a moment, collectively, to really evaluate what it is we want this technology to do for us, and probably more importantly, what we don’t want from AI. Pump the brakes.

What’s your use case?

As someone who works in this field, I’ve been asked numerous times about AI, and getting access to AI, and using AI for all manner of… whatever, but most of these requests are very vague and light on the actual details. What, exactly, do you think this LLM will do for you? The answer is rarely clear or concise. Which I think offers a peek into this mentality of “we have to do AI somehow whatever the cost”. Many people, I would argue probably most people, don’t really understand what AI is, what it can do, or what it can’t do. And most importantly of all: they probably don’t need it. That’s not to say they wouldn’t benefit from it, but it’s not strictly necessary. By far, the most common use cases I’ve seen touted across the board are chat bots that can answer questions about documents or summarize emails and other forms of communication. And to a certain extent, this is quite useful. So let me get back out my rose colored glasses for a moment and look at the positives here.

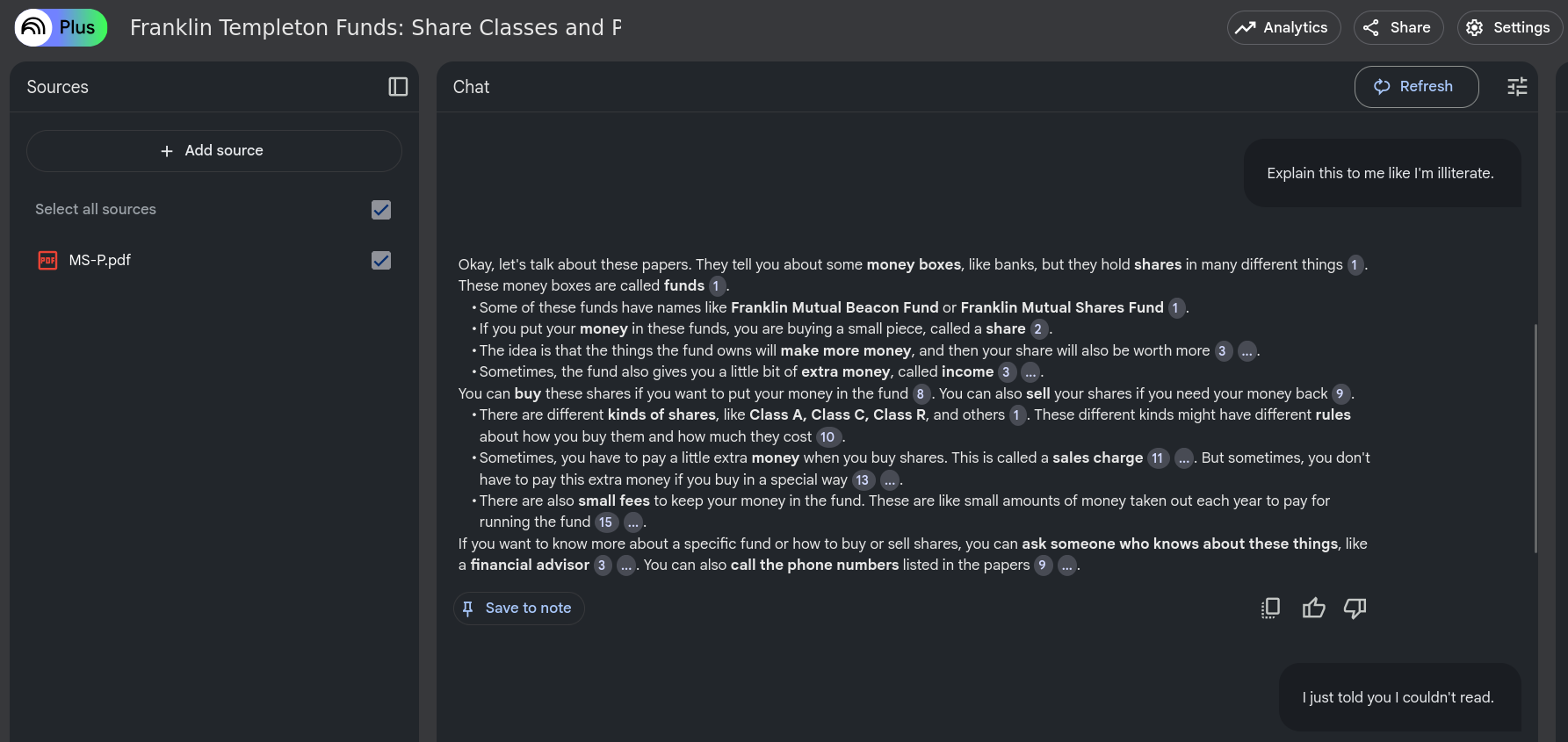

One use case I’ve personally benefited from is being able to make sense of content that is outside of my normal domains of expertise. For example, a prospectus for a fund I’ve invested in for my retirement account. These documents are incredibly dense, dry, and incomprehensible to your average person who has to save for retirement (that’s you, make sure you’re doing this). Furthermore, I really, REALLY, don’t have the desire to read it, and I’m not even all that keen on understanding much of the technical details. I want a broad strokes executive summary, and I want to be able to ask follow up questions quickly, easily, and with reasonable accuracy on the responses. AI excels at this type of work. Google’s NotebookLM can even create a podcast for you to listen to. Although, the hosts are way too excited, often make call backs to things they never actually said, and enjoy using the phrase “deep dive” too much. It’s like listening to a real podcast except the hosts micro-dosed just a little too much that morning and are quick to lose the plot. But… it’s actually good. I can understand something that otherwise I wouldn’t have bothered to look at in the first place.

Another use case that has been very useful to me is understanding complex research papers. I try, as much as possible, to go directly to the source material when researching a topic. Shortcutting the media hype and going straight to the published study is the ideal way to understand what’s happening in the world of science and technology. Except it’s not. Because the pace of discovery, and the esoteric nature of so many new studies and experiments is making it harder and harder to understand a single thing outside of the summary page. Even then, good luck. So you’re faced with a choice: read the dumbed down media release that distills the entire article into a bite sized chunk you can consume now that TikTok has destroyed your already microscopic attention span, or, forge ahead into page after page of Wikipedia rabbit holes until you’ve exhausted your browser’s memory, as well as your own. But there is that third option of getting the AI to break the article down into more understandable pieces. You can interrogate it for more context when you don’t understand something. You can ask for simpler explanations, or ask for deeper discussions. Put simply, it allows for a non technical person to have a realistic chance of understanding original research without it being heavily filtered or the meaning significantly altered.

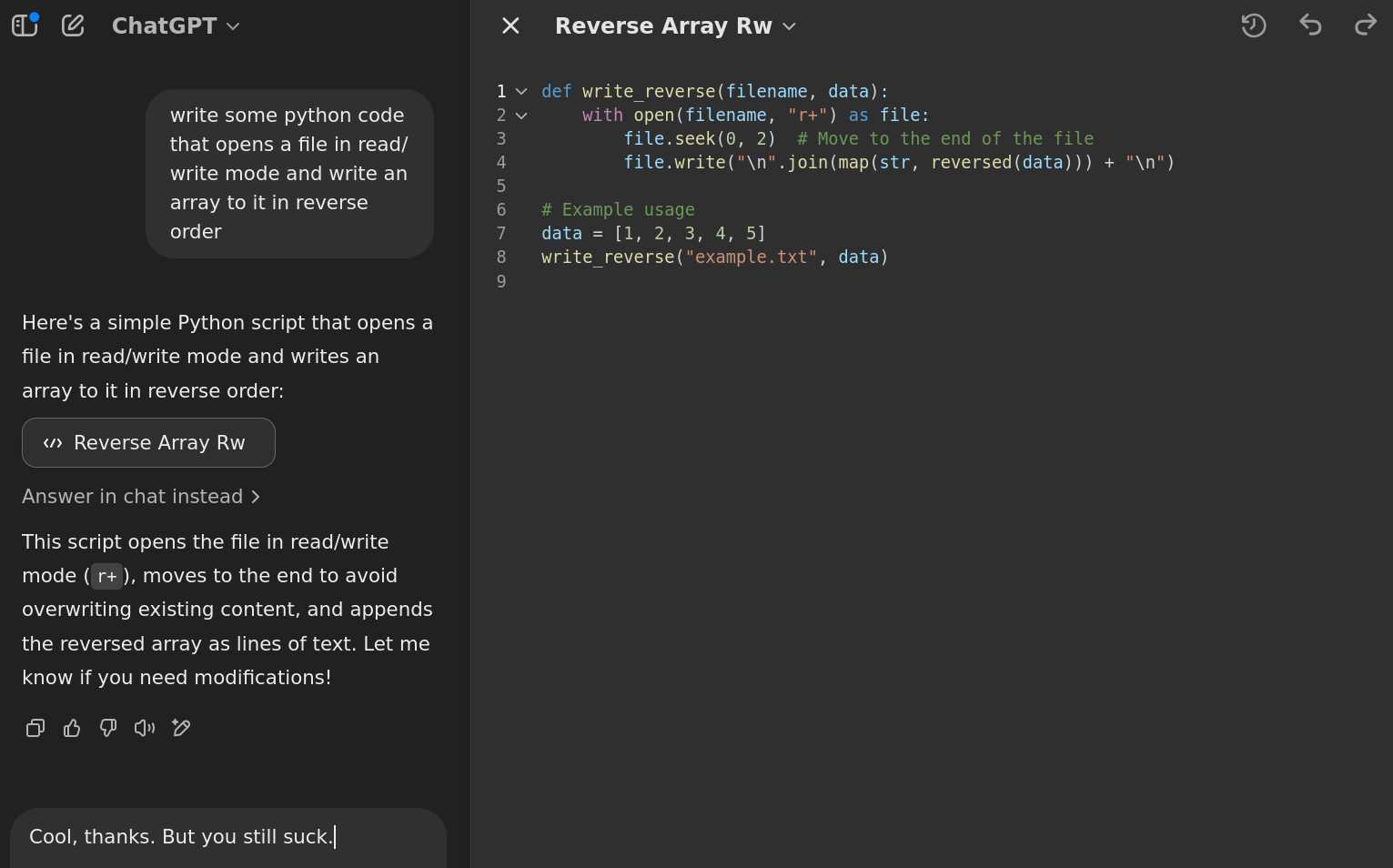

Or perhaps you need a computer program to do something for you. In the past I spent probably 1/3 of my time trying to remember syntax and function names and really trivial, not that important quirks of whatever language I’m using when what I should be working on is the actual problem I set out to solve in the first place. AI can step in and fill out those boilerplate, trivial parts of your code so you can focus more on what really needs to be done. And it’s really nice not to have to remember the exact syntax for opening a file in Python in read/write mode and writing a series of lines to it in reverse order. Even good programmers can’t remember all the little ins and outs of a language and will benefit tremendously from not having to fight with the language itself and instead just work on the difficult parts where you need Actual Intelligence (not to be confused with AI). This has probably been one of the most useful things I’ve used AI for in my daily life.
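For the curious, here is roughly what that file example looks like. This is a minimal sketch, and it assumes "in reverse order" means flipping the lines already in the file; the function name `reverse_lines_in_place` is made up for illustration.

```python
def reverse_lines_in_place(path):
    """Rewrite the text file at `path` with its lines in reverse order.

    Hypothetical helper for illustration; returns the reversed lines.
    """
    # "r+" opens an existing file for both reading and writing.
    with open(path, "r+", encoding="utf-8") as f:
        lines = f.read().splitlines()
        reversed_lines = lines[::-1]
        # Jump back to the start before overwriting the old content.
        f.seek(0)
        f.writelines(line + "\n" for line in reversed_lines)
        # Drop any leftover bytes past the end of the new content.
        f.truncate()
    return reversed_lines
```

None of it is hard, but the `"r+"` mode string, the `seek(0)`, and the `truncate()` are exactly the kind of details that are easy to forget between uses.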

There are lots of other use cases out there we could discuss, but this is just a snippet of what I’ve experienced personally and wanted to highlight as what feels like an unambiguously good use of the tool. But AI proponents are trying to find new and exciting ways to invent the Torment Nexus using AI with every “innovation” they keep pushing on us so let’s dive into some of those less than desirable cases where AI is making things worse. A lot worse.

Stop finishing my sentences

One of the most egregious uses for AI lately, in my oh so humble opinion, is in our interpersonal communications. I’ve seen numerous demos and advertisements for these “make my shitty email look good” AI features, and none of them are landing with me. YouTuber Drew Gooden had an excellent, and hilarious take (actually several takes) on these braindead AI features, one of the worst offenders being Apple’s newest Genius ad series. You really should watch the video because his take is spot on, and I think it highlights some of the glaring issues with using AI in our interpersonal communication and relationships. It’s lazy, and fake, and uninspired and if you send me an email that sounds like AI wrote it, I’m going to be more pissed off about it than if you had just said “hey, please take care of that thing I asked about last week, asshole.”

Let’s highlight the problem with a simple example. Let’s suppose I need to send a work email. I have a bullet list of about 10 things I need to get across, so I crack open my Gmail client, pop those 10 half-complete thoughts into my email, let Gemini formalize it and ship it off to the intended recipient. Then my recipient receives this long-winded email they don’t want to bother reading, so instead they have their AI read it and summarize the key points. But ask yourself: what role did the AI serve in this interaction? Did it actually add any value to my email? I think not. On the contrary, it probably lost some of my original meaning, and almost as importantly, it lost my voice. When I write, I choose words, for better or for worse, because they suit my style of communication. And in written form, it’s difficult to convey meaning, so I’ll often be very deliberate about which words I choose. And let’s not forget, for enterprise customers, these licenses for using such features are paid add-ons. You don’t get this for free. So instead of just being direct, and sending an email summarizing what I want, to a person who doesn’t have time to read the bloated AI slop anyway, we both paid $20 per person per month for the privilege of having AI pretend we care.

Let’s drop the facade, shall we? Why don’t we stop pretending we’re Civil War soldiers writing home to our beloved Beulah, who is with child of course, and just get to the fucking point. Unless you’re reading a blog by some nobody on the internet for the entertainment value of it all, we have things to do, and reading your unnecessarily bloated dissertation of an email ain’t one of them. You realize I’ll spend all of 15 seconds probably skimming it to see if I even need to reply. So why are we all wasting our time? It’s 2025. Email is an asynchronous communications protocol for getting work done efficiently across disparate groups of people who may or may not be in the same physical location. That’s it. You can drop the “dear sirs and or madams”, you can cut the introductions, you don’t need to ask if it’s OK to ask a question. Just fire away. Be blunt. Be polite, but be direct. Ask what you want to ask.

I think this AI craze has brought into focus this phenomenon where we cultivate our digital lives and overanalyze our communications because we can. It’s written. It’s asynchronous. And you can sit down and tweak, and fiddle with phrasing, and bust out the thesaurus and find a different word so you don’t use two of the same word in the same sentence because that really makes you sound like an idiot doesn’t it? Yet when we meet people in person, and talk to them face to face without any AI filter, we can communicate subtle meaning through body language, facial expressions, and other nuanced interactions. That not only makes the conversation less fraught with the potential for misunderstanding, it just feels normal and relaxed and personal. Because it’s human. It’s flawed, and imperfect and that’s OK. We not only can deal with those flaws and imperfections, they actually make the conversation better. We get more meaning across in fewer words, without all the added baggage of trying to sound professional.

I don’t want AI stepping into my conversations, or polishing up my emails, or whatever else it’s offering to do. I hate it when people try to finish my sentences, so why the hell would I want AI to rephrase everything I just wrote? In fact, lately I’ve found myself being more intentional about not constantly workshopping my emails. I write what I need to write. I keep it short to be respectful of everyone’s time who I’m expecting to read it, and I send it. No filters, no AI polishing or formalizing bullshit. Just me saying what I needed to say in the most straightforward, imperfect, and human way possible. Because it’s OK to be exactly that. A fallible human.

Before I move on from this topic, I do want to acknowledge that this feature does have its potential upsides and I don’t want to fully ignore these. For example, not all people are prolific writers. I tend to be very verbose and I enjoy these exercises where I can dump the contents of my brain into a pile of words on a page, neatly organized in sentences and paragraphs. But that’s definitely not everyone’s experience or core competency. Some people really do struggle with organizing thoughts and in those cases I think AI really could help them clarify ideas in ways they’ve not been able to, giving a voice to someone who may have been marginalized in the past because they simply couldn’t clearly communicate. I would consider this a Good Thing (TM).

The biggest case of intellectual theft in human history

To me, that last piece, being a fallible human, is what we’re losing with the rise of AI. The fact that we’re all somehow OK with profiting from, and benefiting from the massive scale of intellectual theft that’s being perpetrated on the part of these AI giants is disturbing to me. I love sharing my work, don’t get me wrong. Unless there’s a very specific reason I can’t share something I made, I’m going to share it. I’ve grown up as a long time user of open source software, and every piece of code I’ve written that I can contribute back to the community, I have. But I did all this in the spirit of sharing with a community; I want other humans to benefit from, and learn from, and hopefully share back their own derivative creative works as a result.

What I didn’t do, and never intended to do, and am completely not OK with, is having AI companies with billions upon billions of dollars in revenue further capitalize on and profit from the work that I’ve done. Not only are they consuming my work and using it to churn out sub-par imitations of it, they’re doing so at the expense of the environment, at the expense of people’s livelihoods, and they’re only getting richer as a result. Nvidia has skyrocketed nearly to the top spot as one of the world’s most valuable companies, riding the coat-tails of the AI boom. But that value was derived entirely from the collective creative output of people just like you and me. And yet here we are being sold our own work back to us at a premium so we don’t get left behind, a sentiment that they are all too willing to cultivate. “Look what this brand is doing over here with AI” or “your competitors are already using AI, and we can help you” is an all too familiar marketing ploy I’ve heard in my countless chatbot demos with various vendors in the industry.

But where did that data come from? Us. It came from us. We’re being sold our own intellectual output back to us. You have a reason, you have a right to be outraged by this. I work at a higher educational institution where the spectre of AI looms over all of our heads and we’re pressured from all different angles to adopt. But it was our work that was consumed by these AIs in the first place. Every scientist, economist, artist, writer, all of us who wanted to share our work for the good of humanity, pro humanitate, has been commoditized by companies like Meta, OpenAI, Google, Amazon, and we’re the ones paying the price while they reap the benefits. We all suffer the consequences of their irresponsible and unethical consumption of our work with no regard for the impact it will have on our society.

Misinformation superhighway

Ah, yes, the impact on society. Let’s talk about that one for a minute shall we? We live in a time where misinformation is reaching a fever pitch across all manner of social media platforms. From YouTube to Facebook, Twitter (I will dead name this platform as long as that wretched scumbag Elmo is at the helm) to TikTok, we are being assaulted day in and day out by a quantity of false and misleading information that I can only assume outpaces the output of legitimate data by an entire order of magnitude. And that was a problem before AI came onto the scene in regular use. Today, the amount of AI slop leaking out of every corner of the internet is astounding. Bots responding to bots on posts created by bots, with the occasional oblivious Facebook Uncle wandering in to comment on this generation’s failings. Kids these days, am I right?

Now that ChatGPT et al. are on the scene, it’s absolutely trivial to generate mountains of seemingly real content to fit whatever agenda you have, and it’s becoming more and more difficult to discern what is and isn’t a trustworthy source of information. At this point, it’s pretty safe to assume the big social media platforms are all 100% untrustworthy garbage. But even formerly trustworthy news sources have to compete for our attention in this oversaturated market. Classical news outlets have to churn out articles and content to stay relevant, using misleading headlines to get just a little more of that sweet, sweet engagement. Meanwhile social media platforms and creators have already perfected the art of engagement farming by posting deliberately controversial and blatantly incorrect information, playing up Cunningham’s Law to great effect. AI only enables this content to be generated with less effort, and in greater quantity, further drowning out legitimate discourse and actual useful content.

How much of this is just some grifter in their basement trying to cash in on clicks and likes? How much of it is deliberate propaganda carefully crafted to manipulate public opinion to support some agenda or desired outcome? AI enables even relatively small groups to have a massive impact through the sheer quantity of content that can be created. Like spam emails, except there’s no spam filter on Facebook or YouTube. Worse, these posts and misinformation get amplified through algorithms that, let’s not forget, are also AI and have been causing problems with social media long before most people knew what an LLM was.

What’s the solution?

For the first time since I started writing this essay, I’m at a loss for words. I don’t know. The cat is out of the bag. Pandora’s box has been opened. The toothpaste is already out of the tube. We’re cooked. OK, maybe not totally cooked, but this isn’t going to be easy, whatever we do. This is going to take deliberate effort on all of our parts. It’s going to take political action. We need to impose regulations on this industry now, not tomorrow. Right now. In our current political climate, this sounds like an impossible task, and maybe it really is. And by the time we actually get a handle on it, it might just be too late. I don’t want to have this doom and gloom view of the future but I struggle to see how the ever increasing pace of AI development ends well for the average working class person. The idea of using machines to replace humans is not new, but AGI has the potential to do this at a scale we’ve never really seen before.

The first time I read Dune by Frank Herbert, I was puzzled by the idea of this future society where technology really didn’t factor in all that much to the narrative of the story. In the Dune universe, computers of all kinds, not necessarily just AI, were banned. I found this particularly strange coming from other visions of future societies where machines and thinking computers abound. But Dune, despite taking place tens of thousands of years in the future, had no such influence. But as I’ve struggled with the consequences AI will have on my own life, I’ve come to see how this vision of the future really might not be so bizarre after all.

“Once men turned their thinking over to machines in the hope that this would set them free. But that only permitted other men with machines to enslave them.” –Frank Herbert

The world of Dune is far from perfect, and it is science fiction after all, but the author made the deliberate choice to tell a human story. Computers were nonexistent; instead they were replaced by hyper intelligent people of an order called Mentats. Travelling interstellar space required the aid of Guild Navigators, people so saturated with the spice melange that they no longer resemble human beings. Dune is certainly not some utopian vision of the future, but I do think it offers a glimpse at a possible future where we as a society have agreed, collectively, that artificial intelligence is a net negative and made a deliberate choice not to use it. A world where we put people first, above all else.

I’m not so naive as to think that we will actually ever be able to put the genie back in the bottle with AI. It’s here to stay, and we’re all going to have to live with that fact. But I do think there is power in how we choose to interact. There is power in our collective voice saying that we don’t want this. You shouldn’t feel like a Luddite for having very real and valid reservations about AI and choosing not to use it. That’s OK. We live in such isolated bubbles of ours, working from home, ordering food via apps, shopping online, that I’m beginning to feel hungry for the opposite. I love these conveniences of modern life, yes, and I’ll continue to use them, but we no longer live in a world where having an app or an AI chatbot is a differentiator. It’s having a real person with a beating heart that has become a rarity.

I work at what I consider to be a prestigious educational institution. I believe in the mission of empowering our future generations with knowledge. I see it as an investment in our collective future. They come here to learn and collaborate and be steeped in the experiences of people from different cultures and backgrounds. They come here to be inspired by passionate people who have dedicated their lives to their research and their intellectual output. I know from my own personal experiences at university almost 2 decades ago now, that the real value wasn’t necessarily the content of the classes, or the degree collecting dust somewhere in my closet. It was being exposed to truly extraordinary teachers, and thinkers, and profound ideas that forced me to look beyond the quaint, comfortable world I had previously been living in. It was the interpersonal relationships I developed with my peers. I was shaped by the experience in ways my GPA and my degree couldn’t begin to reflect. And those intangible qualities of being inducted into an educational institution that’s been around for over a century can’t be taught through an AI chat bot. That’s not what we want or need.

Conclusions

Full disclosure, as soon as I finish this essay, I’m going to give it to an AI to have it argue against me so I can find holes in my logic or places where I’ve lost the plot. I’m not saying we can’t use these things. I’m not saying it’s all terrible, despite the multitude of paragraphs preceding this one where I did say exactly that. My intent for this article is for you, whoever you are, to read it and think a little more about whether or not you should use AI for a given task, even if you could. Is that really something that aligns with what you believe in, as a human being? Does it really benefit us? Is it worth the price we’re paying, both in real dollars as well as the cost to our wellbeing?

If there is a silver lining to all of this, I think it might just be that now that we are faced with the prospect of a dystopian AI future, we can’t just ignore these problems. We have to think about it. We have to be intentional about when we use or don’t use AI. I for one am committed to talking to humans, not machines. No, I will not use your AI chat bot. No, I will not order at the kiosk. No, self checkout is a pain in the ass and I’m going to use the regular checkout line where I can talk to someone, ask them about their day, and feel like I belong here in my community. I refuse to let AI write for me, even if it can do a better job, because that’s not my voice and AI does not represent me. I will support strong regulation for AI, and stronger protections for creators through more stringent copyright laws targeting big tech companies despite feeling like I’m fighting a losing battle. I will buy art from real people, not AI slop, even if it costs more. I will read your poorly worded email or blog post because at least I know it was you who wrote it. I will continue to be an AI skeptic and if I’m wrong, that’s OK, because I am a person with an opinion and I may not get it right every time, but at least I know better than to put glue on pizza.