Thanks for the feedback

First of all, I appreciate all the feedback I’ve received on the AI essay. I know it was a long one, and the fact that anyone read the damn thing at all is flattering. Thank you, really. I’m going to try to make this short (well, shorter than the first one), because I realize that in all my rambling, I didn’t make my point as clearly or succinctly as I had hoped. So here’s the TL;DR version, along with a few other points.

TL;DR

Let’s get straight to the point I was trying to make with my post.

For all the good that AI promises to deliver, for all of the miracles it is poised to perform, AI inevitably stands to serve its true masters at the very top above all else.

I was rambling a bit, as I tend to do when I’m jamming out to music and committing thoughts to the page, so I think it’s worth circling back and putting a nice little bow on that idea. You see, I’m not necessarily against the use of AI. I have my gripes. I don’t like the idea of filtering everything through AI tools, distilling away the qualities I value most in our interpersonal communication. But AI itself is, more or less, a good tool if used correctly.

But you know we never will.

And don’t listen to me; I’m just some random person on the internet who happens to take an interest in writing and technology. You can read a far more eloquent and well-researched paper by Erik Brynjolfsson. Who is that, you might ask? He is, and I’m quoting his bio here, a “Senior Fellow at the Institute for Human-Centered AI and Director of the Digital Economy Lab at Stanford University”. But what does he know? Anyway, Erik writes:

As machines become better substitutes for human labor, workers lose economic and political bargaining power and become increasingly dependent on those who control the technology.

I spent a few hundred words getting to this core idea. In his essay, he calls it the “Turing Trap”. My article refers to it as “The Torment Nexus”, which I like a lot better, but let’s not judge. I would really encourage you to read the entire thing; it’s well worth the effort. Or, if you’re into irony, get an LLM to read it for you.

Lessons never learned

I think of AI as the computer science equivalent of nuclear fission. It is going to catapult us, whether we want it to or not, into a new world where the mere fact of its existence is a threat. Fission was discovered in 1938, and within seven short years we built a fucking bomb with it and killed hundreds of thousands of Japanese civilians with the most terrifying weapon humanity has ever seen. We sparked an arms race and a cascade of proxy wars that have lasted, arguably, to this very day. We now possess the firepower to destroy all of human civilization, and the threat of mutually assured destruction is the only reason we haven’t used nuclear weapons in wartime since the bombings of Hiroshima and Nagasaki.

What makes you think we’re going to be any more responsible when AGI arrives?

The answer, I think, is that we won’t. It’s just that simple. I do think we are at an inflection point where, if we act now and take steps to regulate an industry we know is going to have a tremendous impact on the world we live in, we might stand a chance. But we also have arguably the worst political environment imaginable for enacting the kind of regulations we need right now. President Musk and angry Facebook Uncle, Trump, have absolutely no appetite for regulation. Worse, they stand only to gain from capitalizing on unregulated AI. World’s shittiest dad and micro-penis enjoyer Elmo Skunk has a vested interest in his own crappy AI: Grok. Which, by the way, fuck you, Elmo, for stealing that word. I liked that word.

I’m exhausted

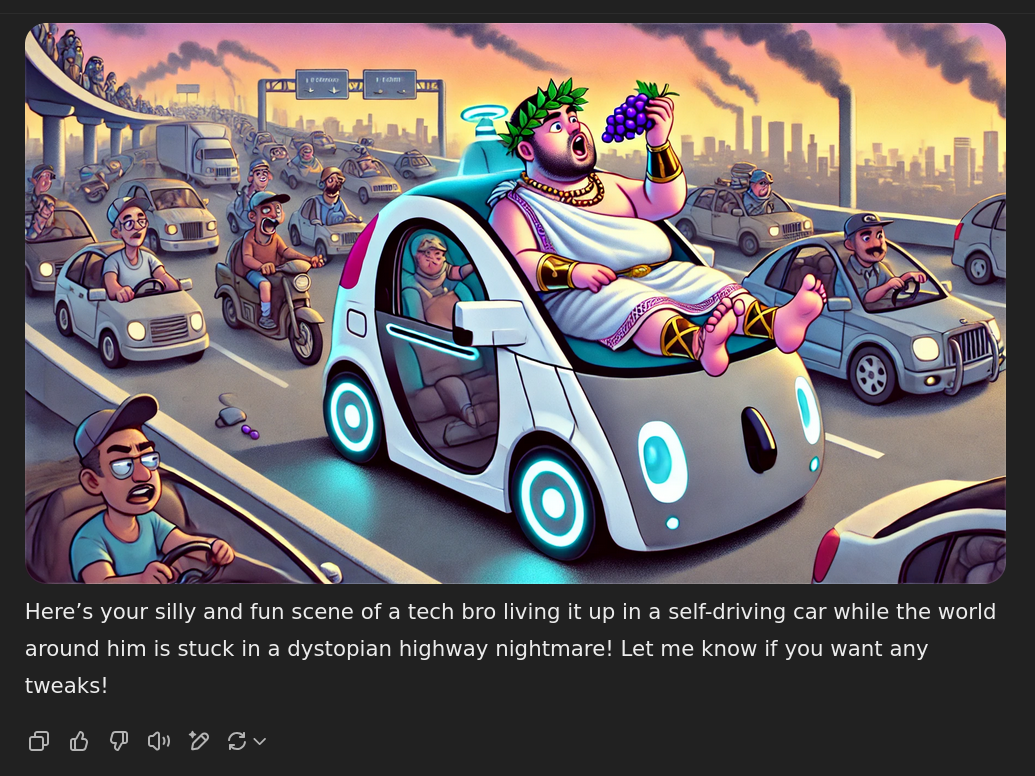

This experience, having LLMs and GenAI foisted on me by tech companies, has been truly exhausting. Fighting off the AI proponents with their rosy visions of a tech-bro future, where everyone rides in a self-driving Tesla while enjoying the fabulous wealth they’ve accumulated through wise investments in Dogecoin, is just miserable. Every day I find myself wondering what the hell my kids are going to inherit when they try to enter the workforce. What future are we creating for them? I’m reminded of a Greek proverb that has always stood out to me as a kind of litmus test for whether or not we’re on the right track as a civilization:

A society grows great when old men plant trees whose shade they know they shall never sit in.

We aren’t planting trees here. We’re actively tearing them up and turning them into, fuck, I dunno, sawdust for the sake of making sawdust. As long as the profits are good, right!? And for what? Just ask yourself: is anything better now that we have these AIs? Has your life really materially improved as a result? I’d say it hasn’t, and even if we do start reaping the benefits of these strong AIs, who is really going to see them? I don’t think it’s going to be you and me.

I’ll leave you with this absolute gem from ChatGPT: